Adding Mistral OCR to LiteLLM

Let's combine a European AI powerhouse and the best AI model proxy and see how they can interact.

Prelude

I've been playing around with AI a lot recently: to get a solid grasp of the providers on the market and the performance of self-hosting, and to understand concepts such as MCP, RAG, prompt engineering and so forth.

Another thing on my radar lately is consciously choosing European technology. With everything going on in the world right now and the strong innovation coming out of the EU tech scene, I believe this is the right bet to take.

So, let's combine those two and look into how you can integrate the new OCR feature from Mistral (a European AI provider) with LiteLLM (a self-hosted model gateway).

Introduction

Combining OCR with LLMs, such as passing Mistral OCR results to Mistral Small, opens up interesting use cases.

For instance, you can extract text from receipts or long white paper PDFs and then analyze or generate insights using that LLM. This combination is particularly useful for automating data extraction, enhancing document processing workflows, and enabling advanced text analysis from visual content. I'll take you through some of the details on how to set up Mistral's relatively new OCR feature in LiteLLM.

Having LiteLLM is a real blessing because a single gateway manages all your models (no clicking around on different websites or API endpoints) and gives you usage and billing insights for each platform consuming the gateway (e.g. Open WebUI, Bruno, your OpenAI-compatible app...).

Adding the Mistral OCR Passthrough Route

To integrate Mistral OCR with LiteLLM, the first step is to configure a passthrough route in LiteLLM. This route lets LiteLLM talk to the Mistral OCR service by relaying the request directly. In other words, LiteLLM does not transform or interpret the data; it acts purely as a proxy, passing the request 1:1 through to Mistral's endpoint.

This configuration cannot be done through the LiteLLM UI; you need to modify the YAML configuration file directly. That's a bit of a bummer and I don't understand why it isn't possible, but hey, it might come in a later release.

In LiteLLM's config.yaml, add the following pass_through_endpoints configuration under general_settings:

    general_settings:
      pass_through_endpoints:
        - path: "/mistral/v1/ocr"
          target: "https://api.mistral.ai/v1/ocr"
          headers:
            Authorization: "bearer os.environ/MISTRAL_API_KEY"
            content-type: application/json
            accept: application/json
          forward_headers: True

We don't want to hardcode our Mistral API key, so we pass it in as an environment variable. However you run LiteLLM, make sure that env var is set. I typically run everything in Docker Compose and provide it in a litellm.env file via my Compose config's env_file directive:

    DATABASE_URL="postgresql://llmproxy:*****@litellm_db:5432/litellm"
    STORE_MODEL_IN_DB=True
    MASTER_KEY="*******"
    POSTGRES_DB=litellm
    POSTGRES_USER=llmproxy
    POSTGRES_PASSWORD=******
    # Ollama
    OLLAMA_API_BASE=http://******:11434
    OLLAMA_API_KEY=""
    # Mistral (the newly added line)
    MISTRAL_API_KEY=********

Calling the Mistral OCR API

Once you have configured the passthrough route and restarted LiteLLM, you can start calling the Mistral OCR API through LiteLLM. Below are examples of how to make API calls.

Example 1: Image OCR Request

To send an image for OCR processing, you can use the following curl command:

    $ curl --request POST \
      --url http://litellmhost.local:4000/mistral/v1/ocr \
      --header 'authorization: Bearer LITELLM_API_KEY' \
      --header 'content-type: application/json' \
      --data '{
        "model": "mistral-ocr-latest",
        "document": {
          "image_url": "https://raw.githubusercontent.com/mistralai/cookbook/refs/heads/main/mistral/ocr/receipt.png"
        }
      }'

- Replace the LiteLLM host with your host

- Make sure you provide a LiteLLM API key (not a Mistral API key) to the Authorization header

- Pick your image URI or base64 encoded image string
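If you would rather make the same call from code, here is a minimal Python sketch of the curl request above, using only the standard library. The host, the `sk-...` key, and the helper names (`image_to_data_uri`, `build_image_ocr_request`, `run_ocr`) are placeholders of mine, not part of LiteLLM or Mistral. The base64 variant assumes Mistral accepts a `data:` URI in `image_url`, which is how I understand their docs.

```python
import base64
import json
import urllib.request

# Assumed values: replace with your own LiteLLM host.
LITELLM_BASE_URL = "http://litellmhost.local:4000"
OCR_ROUTE = "/mistral/v1/ocr"  # the passthrough path from config.yaml


def image_to_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URI (assumed to be accepted by the OCR API)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_image_ocr_request(
    image_ref: str,
    litellm_api_key: str,
    base_url: str = LITELLM_BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl example."""
    payload = {
        "model": "mistral-ocr-latest",
        "document": {"image_url": image_ref},  # image URL or data URI
    }
    return urllib.request.Request(
        url=base_url + OCR_ROUTE,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # A LiteLLM key, not a Mistral key: LiteLLM injects the Mistral key itself.
            "Authorization": f"Bearer {litellm_api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_ocr(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Usage would look like `run_ocr(build_image_ocr_request("https://.../receipt.png", "sk-..."))`. For a local file, pass `image_to_data_uri(open("receipt.png", "rb").read())` as the first argument instead.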

Example response:

    {
      "pages": [
        {
          "index": 0,
          "markdown": "# PLACE FACE UP ON DASH <br> CITY OF PALO ALTO <br> NOT VALID FOR ONSTREET PARKING \n\nExpiration Date/Time 11:59 PM\n\nAUG 19, 2024\n\nPurchase Date/Time: 01:34pm Aug 19, 2024\nTotal Due: $\\$ 15.00$\nRate: Daily Parking\nTotal Paid: $\\$ 15.00$\nPmt Type: CC (Swipe)\nTicket \\#: 00005883\nS/N \\#: 520117260957\nSetting: Permit Machines\nMach Name: Civic Center\n\\#*****-1224, Visa\nDISPLAY FACE UP ON DASH\n\nPERMIT EXPIRES\nAT MIDNIGHT",
          "images": [],
          "dimensions": {
            "dpi": 200,
            "height": 3210,
            "width": 1806
          }
        }
      ],
      "model": "mistral-ocr-2503-completion",
      "usage_info": {
        "pages_processed": 1,
        "doc_size_bytes": 3110191
      }
    }

Example 2: OCR Request for PDFs

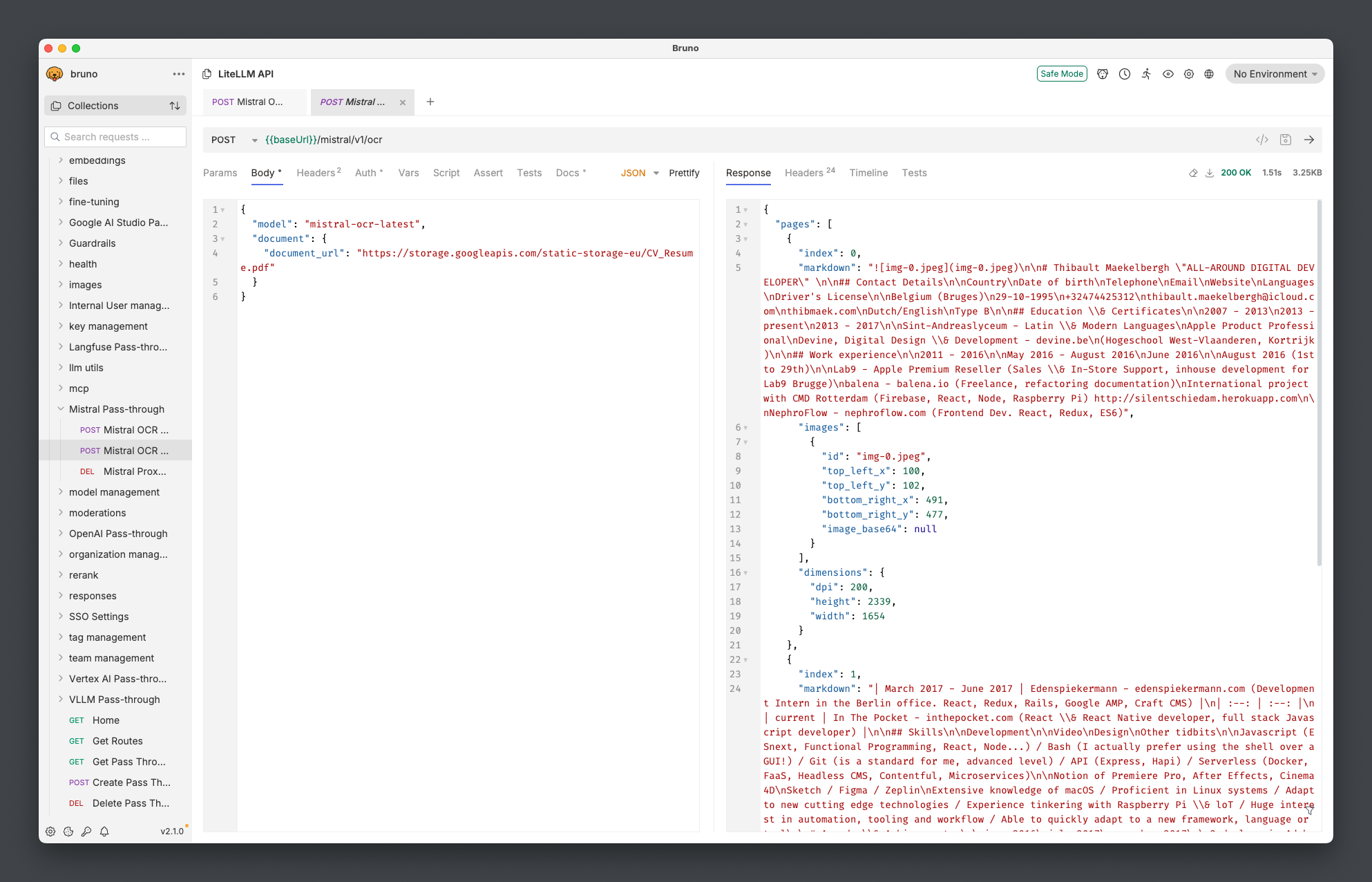

OCR'ing a PDF also also straight forward but uses another request body format. I've provided a screenshot here of Bruno that shows the request format & response to OCR my public resume:
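Since the PDF body only appears in the screenshot, here is a rough text version as a Python sketch. The `type` and `document_url` field names are my assumption based on Mistral's public OCR documentation, so treat the screenshot as the authoritative version. `build_pdf_ocr_payload` and the example URL are made up for illustration.

```python
import json


def build_pdf_ocr_payload(pdf_url: str, model: str = "mistral-ocr-latest") -> dict:
    """Build the JSON body for OCR'ing a publicly reachable PDF."""
    return {
        "model": model,
        "document": {
            # Assumed field names (per Mistral's OCR docs), not taken from the screenshot.
            "type": "document_url",
            "document_url": pdf_url,
        },
    }


# Print the body so it can be pasted into Bruno or curl's --data argument.
print(json.dumps(build_pdf_ocr_payload("https://example.com/resume.pdf"), indent=2))
```

The body is POSTed to the same /mistral/v1/ocr passthrough route, with the same LiteLLM Authorization header as in the image example.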

Current limitations

While integrating Mistral OCR with LiteLLM offers several benefits (unified interface, scalability, single source of truth), some areas still need improvement IMO to make this setup truly compelling. The most important one is cost monitoring.
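As a stopgap, the usage_info block in each OCR response can be tallied on the client side. The sketch below is only that, a workaround under my own assumptions: the helper names are hypothetical, and the per-page price is deliberately a parameter you have to look up yourself rather than a number I made up.

```python
from collections import Counter


def record_usage(tally: Counter, consumer: str, ocr_response: dict) -> None:
    """Add the pages processed in one OCR response to a consumer's running total."""
    pages = ocr_response.get("usage_info", {}).get("pages_processed", 0)
    tally[consumer] += pages


def estimate_cost(tally: Counter, price_per_page: float) -> dict:
    """Turn page counts into cost estimates; price_per_page comes from your provider's pricing."""
    return {consumer: pages * price_per_page for consumer, pages in tally.items()}
```

You would call `record_usage(tally, "open-webui", response)` after every OCR call and run `estimate_cost` whenever you want a report.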

Conclusion

Integrating Mistral OCR with LiteLLM offers a streamlined approach to managing OCR tasks within a unified AI model gateway.

While the current passthrough API setup has limitations, particularly in cost monitoring, the benefits of a centralized interface make it a valuable addition. The next step is definitely to look at how you can combine Mistral OCR with a Mistral model like Pixtral or Small to do the actual processing.

I'm thinking of integrating those Mistral LLM models via LiteLLM to:

- OCR PDFs and give me TLDR summaries

- Create visuals out of PDF white papers

- Perform OCR and map the result straight to JSON or other formats using Structured Output, so a backend or other API receives it in the correct format
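To give an idea of the first item, here is a rough Python sketch that joins the markdown of all OCR pages and builds a summary request for LiteLLM's OpenAI-compatible chat completions route. The model alias `mistral-small`, the host, and the function names are assumptions of mine; use whatever name you registered the model under in LiteLLM.

```python
import json
import urllib.request

LITELLM_BASE_URL = "http://litellmhost.local:4000"  # assumed host, replace with yours


def ocr_pages_to_markdown(ocr_response: dict) -> str:
    """Join the markdown of all OCR pages, ordered by page index."""
    pages = sorted(ocr_response.get("pages", []), key=lambda page: page.get("index", 0))
    return "\n\n".join(page.get("markdown", "") for page in pages)


def build_summary_request(
    ocr_response: dict,
    litellm_api_key: str,
    model: str = "mistral-small",  # hypothetical alias configured in LiteLLM
    base_url: str = LITELLM_BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request that summarizes the OCR text."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Give a TLDR summary of the document in three bullet points."},
            {"role": "user", "content": ocr_pages_to_markdown(ocr_response)},
        ],
    }
    return urllib.request.Request(
        url=base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {litellm_api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it works like any other request: open it with `urllib.request.urlopen` and decode the JSON body of the response.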